Threat Modeling AI Systems and Services: A Deep Dive

THREAT MODELING | SECURITY | AI

Arron 'finux' Finnon

4/2/2025 | 6 min read

Threat Modeling AI Systems: Securing the Future of Intelligent Technology

Imagine a world where every decision, from healthcare diagnostics to autonomous driving, is powered by AI. This deep dive explores the critical need for robust threat modeling in AI — a practice that not only protects our systems but also builds trust in technology that is rapidly reshaping our lives.

The Imperative of AI Threat Modeling

1. Why Threat Modeling Matters for AI

AI is increasingly integrated into the core infrastructure of our society. With such pervasive influence, its security vulnerabilities become risks not just to data, but to the very decision-making processes that affect everyday life.

Trust and Reliability: Without proactive security, essential services can be disrupted, and decisions can be manipulated — undermining public trust.

Beyond Traditional Security: Conventional cybersecurity techniques, designed for static code, fall short when faced with AI’s dynamic, data-driven, “black box” nature.

Proactive Defense: Threat modeling helps anticipate potential attack vectors early in the AI development lifecycle, making prevention more efficient and cost-effective.

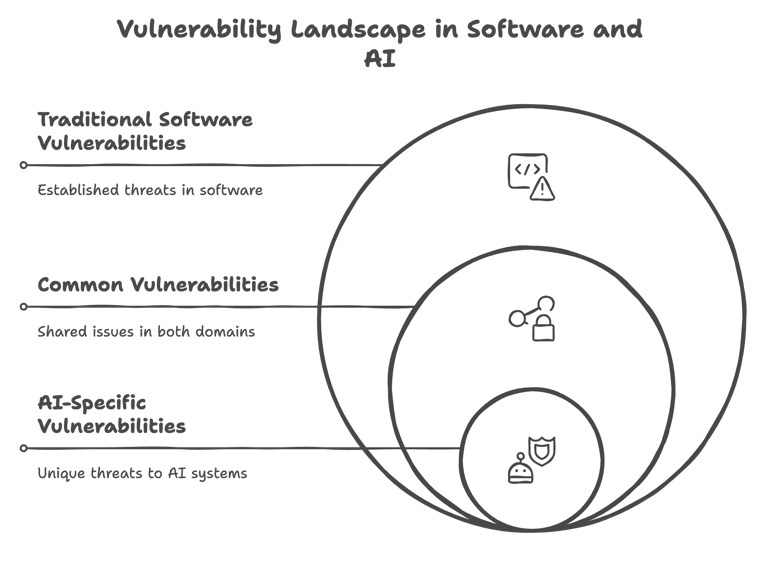

Understanding the Distinct Landscape of AI Security

1. AI vs. Traditional Software Vulnerabilities

Primary Assets:

Traditional Software: Code is the primary asset to protect.

AI Systems: Data and models form the core, introducing risks like data poisoning, model theft, and adversarial manipulation.

Attack Surfaces:

Software: Susceptible to exploits like SQL injection or cross-site scripting.

AI: Vulnerable at both training time and inference time. Altered training data can skew outcomes, and adversarial examples (inputs crafted at inference time) can trick models into misclassifying inputs.
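
To make the adversarial-example risk concrete, here is a minimal sketch of the fast gradient sign method (FGSM) against a toy logistic-regression classifier. The weights, the input, and the epsilon value are made-up numbers chosen for illustration, not taken from any real system. Attacks on production models are far more involved, but the core idea is the same: nudge each feature slightly in the direction that increases the model's loss.

```python
import math

# Hypothetical toy model: fixed logistic-regression weights (illustrative only).
WEIGHTS = [2.0, -1.0]
BIAS = 0.0


def score(x):
    """Linear score w.x + b."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Return class 1 if the score is positive, else class 0."""
    return 1 if score(x) > 0 else 0


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, true_label, epsilon):
    """Shift each feature by +/- epsilon in the direction that raises the loss."""
    p = 1.0 / (1.0 + math.exp(-score(x)))
    # Gradient of the logistic loss with respect to the input is (p - y) * w.
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [0.3, 0.2]
x_adv = fgsm(x, true_label=1, epsilon=0.2)
print(predict(x), predict(x_adv))
```

With these toy values, a change of at most 0.2 per feature is enough to move the input across the decision boundary, which is why input-level defenses cannot rely on perturbations being large or obvious.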

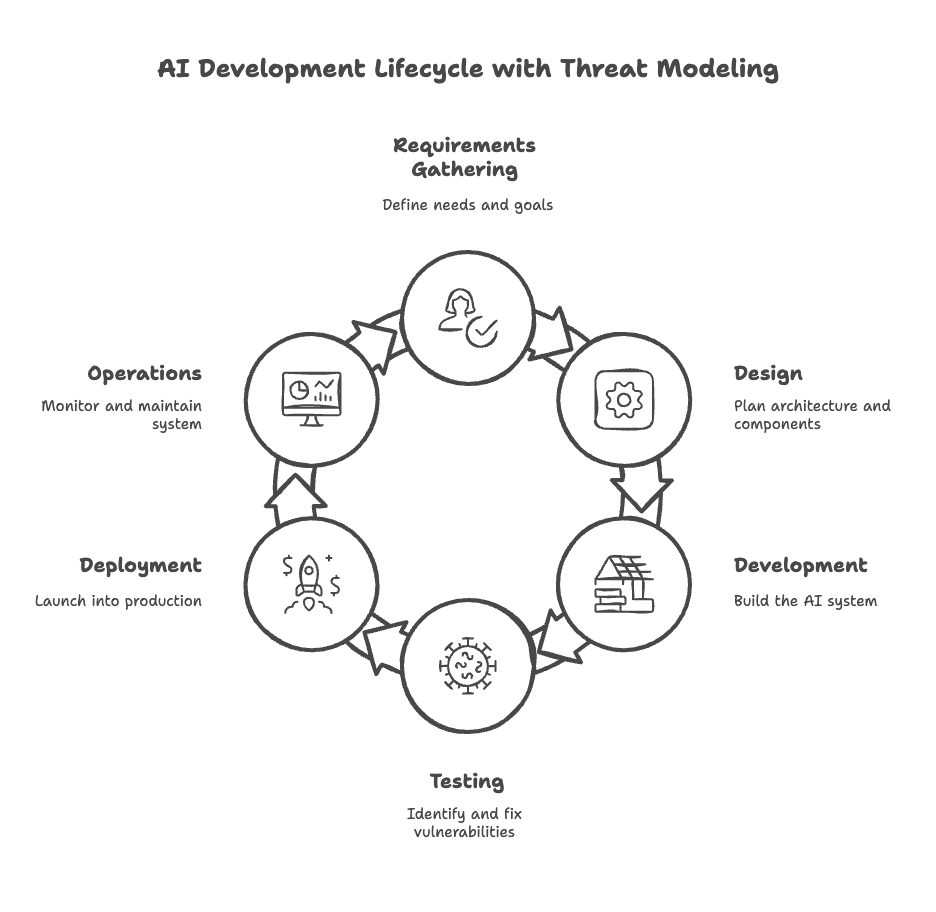

2. The AI Lifecycle and Supply Chain

Complex Ecosystem: From sourcing and curating data to training and deployment, each step introduces potential vulnerabilities.

Third-Party Risks: Reliance on open-source models, public datasets, and external libraries expands the attack surface, demanding tailored security measures.

Data as the Lifeblood: Integrity, provenance, and confidentiality of data are paramount — if compromised, the AI’s decisions become unreliable.
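
One simple way to check data integrity across the lifecycle is a hash manifest: record a digest for every dataset file when it is approved, then compare before each training run. The sketch below assumes the dataset is a directory of files; the function names are hypothetical and the manifest is a plain dictionary rather than any standard format.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return paths that are missing, new, or whose contents have changed."""
    current = build_manifest(root)
    return sorted(
        name
        for name in set(manifest) | set(current)
        if manifest.get(name) != current.get(name)
    )
```

For the check to mean anything, the manifest should be stored and access-controlled separately from the data it describes; an attacker who can rewrite both defeats it.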

3. Challenges of Data-Centric Threats and Model Opacity

Black-Box Complexity: Unlike easily inspectable code, AI models operate as opaque systems with millions or even billions of parameters, making manual review nearly impossible.

Predicting Failures: Emergent properties in pre-trained models can lead to unpredictable failure modes, requiring innovative approaches for testing and validation.

The “Why” of AI Threat Modeling: Consequences and Limitations

1. Consequences of Neglect

Widespread Impact: Data breaches, biased outputs, and system manipulations can lead to dire outcomes, from misdiagnosis in healthcare to unsafe autonomous vehicles.

Reputational Damage: Beyond technical failures, compromised AI can erode trust, causing long-term damage to organizations and industries.

2. Limitations of Conventional Security Practices

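Mismatch of Techniques: Tools like static code analysis are insufficient on their own against AI systems that learn and evolve. They inspect source code, not the training data or learned weights that determine a model's behavior.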

Need for Specialized Controls: Preventing attacks such as data poisoning or model theft requires tailored methodologies that extend beyond traditional frameworks.

3. Proactive Benefits of Threat Modeling

Early Integration: Embedding threat modeling in the AI development lifecycle leads to early identification of vulnerabilities.

Collaborative Security Culture: Involving developers, architects, and security experts promotes a proactive, security-first mindset, enhancing overall resilience.

Detailed Frameworks for AI Threat Modeling

1. Traditional Models Reimagined for AI

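STRIDE and Its Evolution: Originally a methodology for traditional software, STRIDE (Spoofing, Tampering, Repudiation, Information disclosure, Denial of service, Elevation of privilege) is being adapted to address AI’s unique risks by mapping threats such as data poisoning and model theft onto classic categories such as tampering and information disclosure.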
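
A mapping like this can be kept as a small lookup table that the team reviews and extends. The sketch below is illustrative only: apart from data poisoning under tampering, the threat names and their categories are assumptions, and teams may reasonably classify them differently.

```python
from enum import Enum


class Stride(Enum):
    SPOOFING = "Spoofing"
    TAMPERING = "Tampering"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information disclosure"
    DENIAL_OF_SERVICE = "Denial of service"
    ELEVATION_OF_PRIVILEGE = "Elevation of privilege"


# Illustrative mapping only: an assumption for this sketch, not an official taxonomy.
AI_THREATS = {
    "data poisoning": [Stride.TAMPERING],
    "adversarial examples": [Stride.TAMPERING, Stride.SPOOFING],
    "model theft": [Stride.INFORMATION_DISCLOSURE],
    "training data leakage": [Stride.INFORMATION_DISCLOSURE],
    "resource-exhausting queries": [Stride.DENIAL_OF_SERVICE],
}


def threats_in(category: Stride) -> list[str]:
    """List the AI threats filed under one STRIDE category."""
    return sorted(name for name, cats in AI_THREATS.items() if category in cats)


for category in Stride:
    print(f"{category.value}: {threats_in(category) or 'none mapped yet'}")
```

Categories that come back empty are worth a second look: they may be genuinely out of scope, or they may point to threats nobody has considered yet.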

2. AI-Specific Frameworks

MAESTRO:

Focus: Agentic AI systems.

Approach: Layered analysis of multi-agent architectures and their interactions.

PLOT4ai:

Focus: Responsible AI with a strong emphasis on privacy and ethics.

Features: A comprehensive library of AI-specific threats and collaborative threat modeling techniques.

MITRE ATLAS:

Focus: Adversarial AI threats.

Strengths: ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) provides a structured knowledge base of adversary tactics, techniques, and case studies, modeled on the familiar MITRE ATT&CK framework and applied to the AI realm.

3. Integrating Adversarial Machine Learning (AML)

AML Concepts: Techniques such as adversarial training enhance model resilience by exposing AI systems to manipulated inputs during training, teaching them to recognize and resist adversarial attacks.
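
As a rough illustration of adversarial training, the sketch below trains a tiny logistic-regression model where every gradient step uses an input perturbed by the fast gradient sign method (FGSM) against the current weights. The data, learning rate, epsilon, and function names are all made up for this example; real adversarial training uses deep-learning frameworks and stronger attacks, but the loop has the same shape.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def predict_proba(w, b, x):
    """Probability of class 1 under the logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, epsilon):
    """Perturb x by epsilon per feature in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, epsilon=0.5, lr=0.1, epochs=20):
    """Stochastic gradient descent where every update uses an FGSM-perturbed input."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, epsilon)  # attack the current model
            error = predict_proba(w, b, x_adv) - y
            w = [wi - lr * error * xi for wi, xi in zip(w, x_adv)]
            b -= lr * error
    return w, b


# Made-up, linearly separable toy data: (features, label).
DATA = [([2, 2], 1), ([3, 2], 1), ([2, 3], 1),
        ([-2, -2], 0), ([-3, -2], 0), ([-2, -3], 0)]

w, b = adversarial_train(DATA)
robust = all(
    (predict_proba(w, b, fgsm(w, b, x, y, 0.5)) > 0.5) == bool(y) for x, y in DATA
)
print("correct under FGSM with epsilon=0.5:", robust)
```

The final check re-attacks the trained model with the same epsilon used in training. Robustness measured this way says nothing about larger perturbations or different attack methods, so it should be read as a lower bar, not a guarantee.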

The Practical Steps of AI Threat Modeling

1. Defining Scope and Objectives

Clarity in Focus: Start by mapping the entire AI system, including data flows, components, and interactions.

Setting Goals: Establish clear security objectives — what needs protection and why, whether it’s safeguarding sensitive data or ensuring the accuracy of model predictions.

2. Identifying and Categorizing Assets

Data: Catalog every form of data used — from raw training sets to live operational data.

Models: Document the trained AI models and their underlying algorithms.

Infrastructure: Map out the hardware, cloud services, libraries, and APIs supporting the AI system.

Interactions: Identify every point where users and systems interface with the AI.
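
The four asset categories above can be captured in a lightweight inventory that also flags categories nobody has filled in yet. This is a sketch under assumptions: the class names, fields, and example entries (an imaginary fraud-detection service) are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class AssetKind(Enum):
    DATA = "data"
    MODEL = "model"
    INFRASTRUCTURE = "infrastructure"
    INTERACTION = "interaction"


@dataclass
class Asset:
    name: str
    kind: AssetKind
    owner: str
    notes: str = ""


@dataclass
class Inventory:
    assets: list[Asset] = field(default_factory=list)

    def add(self, asset: Asset) -> None:
        self.assets.append(asset)

    def by_kind(self, kind: AssetKind) -> list[str]:
        """Names of all recorded assets in one category."""
        return [a.name for a in self.assets if a.kind is kind]

    def uncovered_kinds(self) -> list[AssetKind]:
        """Categories with no entries yet, a hint that scoping is incomplete."""
        present = {a.kind for a in self.assets}
        return [k for k in AssetKind if k not in present]


# Hypothetical entries for an imaginary fraud-detection service.
inventory = Inventory()
inventory.add(Asset("transactions-2024.parquet", AssetKind.DATA, "data-eng"))
inventory.add(Asset("fraud-classifier-v3", AssetKind.MODEL, "ml-team"))
inventory.add(Asset("scoring REST API", AssetKind.INTERACTION, "platform"))

print(inventory.uncovered_kinds())
```

Here the infrastructure category is still empty, which is the kind of gap the inventory is meant to surface before threat discovery begins.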

3. Discovering and Analyzing Threats

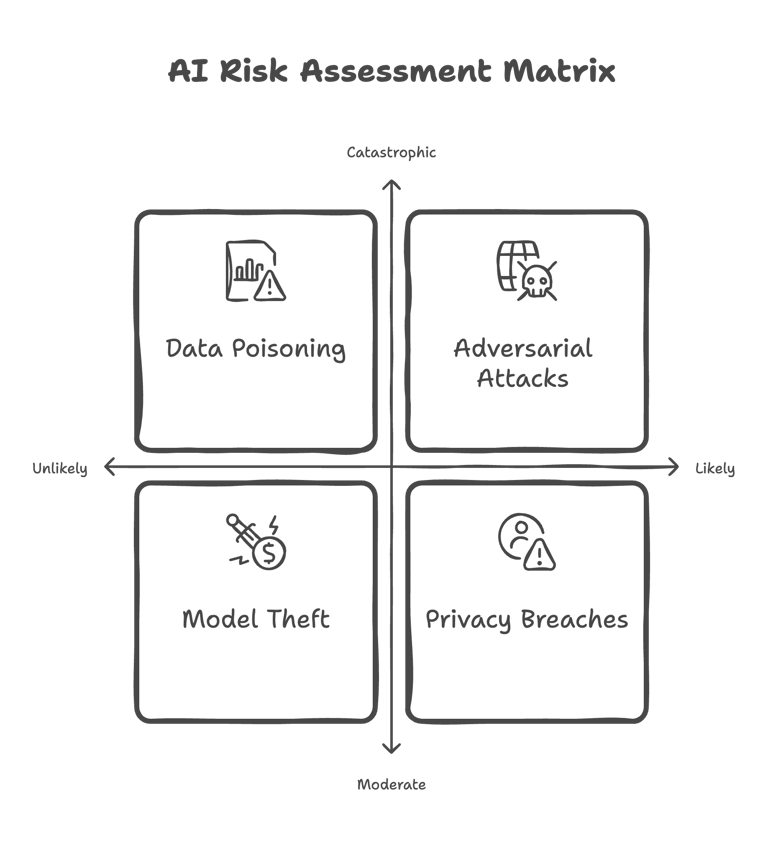

Brainstorming Attack Vectors: Consider both generic IT threats and those unique to AI — data poisoning, adversarial attacks, model theft, and privacy violations.

Leveraging Threat Intelligence: Utilize public threat databases and frameworks like MITRE ATLAS to enrich your analysis.

4. Risk Assessment

Likelihood and Impact: Use methodologies such as DREAD (Damage potential, Reproducibility, Exploitability, Affected users, Discoverability) to rate each threat.

Prioritization: Focus efforts on threats with the highest risk and potential impact.
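
A DREAD assessment can be as simple as five ratings per threat and a sort. The sketch below assumes a 1 to 10 scale and an unweighted mean, which is a common convention rather than a fixed rule; the threats and their ratings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DreadScore:
    """Each factor is rated from 1 (low) to 10 (high)."""
    damage: int
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def __post_init__(self):
        for name, value in vars(self).items():
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be between 1 and 10, got {value}")

    @property
    def risk(self) -> float:
        """Unweighted mean of the five factors."""
        return sum(vars(self).values()) / 5


# Made-up ratings for three example threats.
THREATS = {
    "data poisoning": DreadScore(8, 5, 4, 9, 3),
    "model theft via API": DreadScore(6, 8, 7, 3, 6),
    "prompt log leakage": DreadScore(7, 9, 8, 7, 7),
}

ranked = sorted(THREATS, key=lambda name: THREATS[name].risk, reverse=True)
for name in ranked:
    print(f"{THREATS[name].risk:.1f}  {name}")
```

The ratings themselves are subjective, so the ranking is most useful as a starting point for discussion; teams that find one factor dominates in practice can swap the plain mean for a weighted one.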

5. Mitigation Strategies and Security Controls

Targeted Actions: Develop specific countermeasures for each identified risk, from robust input validation and data sanitization to advanced anomaly detection and access controls.

Iterative Process: Recognize that threat modeling is ongoing — continually update the model as the AI system evolves and new threats emerge.
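
Two of the countermeasures named under Targeted Actions, input validation and anomaly detection, can be sketched in a few lines. The example assumes a model that takes a fixed-length vector of numeric features with known valid ranges; the function names, bounds, and the three-standard-deviation threshold are assumptions for illustration.

```python
import math
import statistics


def validate_features(features, bounds):
    """Reject inputs with the wrong length, non-finite values, or out-of-range features."""
    if len(features) != len(bounds):
        raise ValueError(f"expected {len(bounds)} features, got {len(features)}")
    for index, (value, (low, high)) in enumerate(zip(features, bounds)):
        if not isinstance(value, (int, float)) or not math.isfinite(value):
            raise ValueError(f"feature {index} is not a finite number")
        if not low <= value <= high:
            raise ValueError(f"feature {index}={value} outside [{low}, {high}]")
    return list(features)


def is_anomalous(value, history, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the historical mean."""
    mean = statistics.fmean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return value != mean
    return abs(value - mean) / spread > threshold
```

Range checks stop malformed inputs but not carefully crafted in-range ones, so the anomaly check is a complement rather than a replacement. A simple z-score like this assumes roughly stable, unimodal history and will need tuning per feature.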

Best Practices and Continuous Adaptation

1. In-Depth System Understanding

Document Everything: Use detailed diagrams to capture the flow of data and interconnections within your AI system.

Focus on Provenance: Track data origin and evolution to prevent tampering and ensure integrity.

2. Infrastructure and Deployment Security

Holistic Approach: Secure not just the AI models, but also the underlying hardware, cloud services, and software libraries.

Vendor and Third-Party Vigilance: Monitor the security of open-source components and external dependencies.

3. Ethical Considerations and Unintended Consequences

Fairness and Bias: Integrate bias detection mechanisms and ensure that the AI does not inadvertently cause harm or discrimination.

Responsible Deployment: Balance security with ethical implications to create systems that are both safe and socially responsible.
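
One basic bias check is the demographic parity gap: the difference in positive-prediction rates between groups. The sketch below is a minimal version with invented predictions and two hypothetical group labels. It is only one of several fairness metrics, and which one is appropriate depends on the application.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals, positives = defaultdict(int), defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups).values()
    return max(rates) - min(rates)


# Made-up predictions for two hypothetical groups, "a" and "b".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups))
print(demographic_parity_gap(preds, groups))
```

In this toy data, group "a" receives a positive prediction three times out of four and group "b" once out of four, giving a gap of 0.5. What gap is acceptable is a policy decision, not something the code can answer.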

4. Continuous Monitoring and Adaptation

Dynamic Threat Landscape: Regularly review and update threat models to keep pace with the evolving nature of AI technology and cyber threats.

Feedback Loops: Use real-world insights and incident analyses to refine security measures continuously.
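
Continuous monitoring often starts with a drift metric that compares live inputs against a training-time baseline. The sketch below uses the population stability index (PSI) over fixed bins. The bin edges and the small floor for empty bins are assumptions chosen for this example, and any alert threshold should be validated per system.

```python
import math


def bin_shares(values, edges, floor=1e-6):
    """Fraction of values in each bin; a small floor avoids log(0) for empty bins."""
    counts = [0] * (len(edges) + 1)
    for value in values:
        # Number of edges at or below the value gives its bin index (edges sorted).
        counts[sum(value >= edge for edge in edges)] += 1
    return [max(count / len(values), floor) for count in counts]


def population_stability_index(baseline, live, edges):
    """PSI = sum((live - base) * ln(live / base)) over bins; 0 means identical shares."""
    base = bin_shares(baseline, edges)
    current = bin_shares(live, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, current))


# Made-up feature values: a baseline sample and a shifted live sample.
EDGES = [0.25, 0.5, 0.75]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
shifted = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.7, 0.95]

print(population_stability_index(baseline, baseline, EDGES))
print(population_stability_index(baseline, shifted, EDGES))
```

A rising PSI does not say whether the shift is benign (seasonality) or hostile (poisoning or probing), only that the inputs no longer look like the data the model was trained on and the threat model's assumptions deserve a review.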

Real-World Case Studies: Lessons Learned

1. Data Poisoning Incidents

Example: The “Nightshade” tool subtly alters images so that text-to-image models trained on scraped copies learn incorrect associations between prompts and visual concepts, illustrating how even minor manipulations can derail an AI system’s behavior.

Implications: Reinforces the need for rigorous data validation and continuous monitoring.

2. Adversarial Attacks in Practice

Scenario: Researchers have shown that small physical modifications to traffic signs, such as stickers, can cause vision models to misread them, highlighting the tangible risks in safety-critical applications such as autonomous driving.

Takeaway: Security measures must evolve to detect and mitigate adversarial inputs in real time.

3. Model Theft and Intellectual Property Risks

Case Study: Demonstrated model extraction techniques, ranging from systematic querying of prediction APIs to electromagnetic side-channel capture, show the financial and competitive risks associated with stolen AI models.

Lesson: Implement robust safeguards to protect proprietary algorithms and training processes.
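
Because query-based extraction depends on sending many requests, one basic safeguard is a per-client query budget. The sketch below is a sliding-window limiter; the class name, limits, and client identifiers are hypothetical, and timestamps are passed in explicitly so the logic is easy to test.

```python
from collections import defaultdict, deque


class QueryBudget:
    """Sliding-window limit on how many predictions each client may request."""

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        """Record a query at time `now` and report whether it is within budget."""
        timestamps = self._history[client_id]
        # Drop timestamps that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_queries:
            return False
        timestamps.append(now)
        return True


budget = QueryBudget(max_queries=3, window_seconds=60)
print([budget.allow("client-1", t) for t in (0, 1, 2, 3)])
```

In a service, `now` would typically come from `time.monotonic()`. Rate limits slow extraction rather than prevent it, since an attacker can spread queries over time or across accounts, so they work best alongside logging and review of unusual query patterns.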

4. Privacy Breaches Involving AI

Incident: Leaks resulting from mishandled data in systems like chatbots underscore the importance of maintaining strict confidentiality protocols and securing data pipelines.

Result: Organizations must adopt comprehensive privacy measures tailored to the AI environment.
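
One small piece of securing a chatbot data pipeline is redacting obvious personal data before conversations are logged or reused for training. The sketch below uses two deliberately simple regular expressions for e-mail addresses and phone-like digit runs. The patterns and placeholder tokens are assumptions for illustration and will miss many real-world formats.

```python
import re

# Deliberately simple patterns; real deployments need broader, locale-aware rules.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Mail jane.doe@example.com or call +44 20 7946 0958 today"))
```

Pattern-based redaction is a first filter, not a privacy guarantee: names, addresses, and free-text identifiers pass straight through, so it should sit alongside access controls and retention limits on the logs themselves.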

Conclusion: A Call to Build Resilient AI Systems

In an era where AI governs critical aspects of modern life, threat modeling is not a luxury — it’s a necessity. By taking a comprehensive, layered approach to security, organizations can build resilient AI systems that not only defend against known threats but also adapt to new challenges as they emerge.

This article is structured to offer both a high-level overview and the granular detail needed to apply AI threat modeling in practice.

Remember: The future of AI security depends on our ability to integrate detailed, proactive threat modeling into every stage of development — ensuring that our intelligent systems remain safe, trustworthy, and capable of transforming our world for the better.

